Evolution of adaptive optics devices with improved wavefront sensor performance

Published on October 16, 2025

There are several methods for observing exoplanets, and about 4000 exoplanets have been discovered so far using these methods. Of these, only around 10 to 20 exoplanets have been confirmed by “direct imaging” with an observation device using a telescope. The main mission of our research is to improve the accuracy of direct imaging observation equipment and capture the light emitted by as many planets as possible.

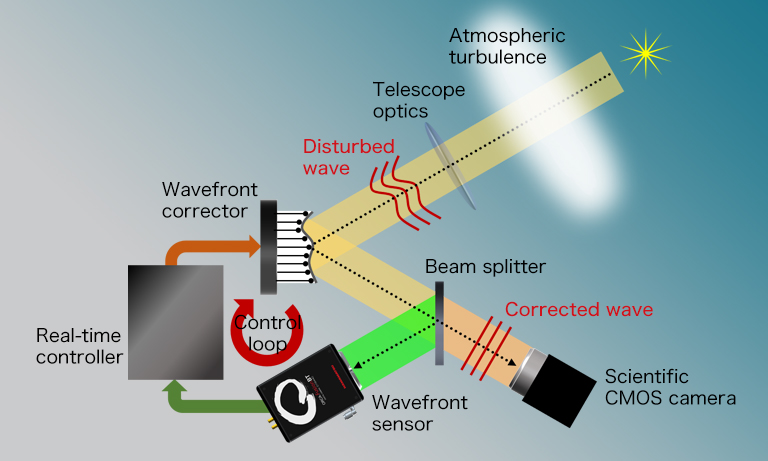

Adaptive optics is a technique that immediately corrects a wavefront disturbed by atmospheric fluctuations and obtains the clearest star image without distortion within the performance limits of a telescope. It is also a key component of the observation device.

Real-time, highly accurate wavefront correction requires high-speed readout and high resolution. In addition, wavefront correction is sometimes performed when very few photons are available, for example when using faint celestial bodies or laser guide stars (artificial stars) as the reference, which requires a highly sensitive camera.

What is needed to improve performance, and what will be achieved once the ultimate performance is reached? We asked Dr. Kodai Yamamoto of the Astronomical Observatory, Graduate School of Science, Kyoto University, who is working on direct imaging of exoplanets using a giant telescope, about the current performance of adaptive optics and future prospects of using them.

- The desire to directly image the actual light emitted by planets using telescopic observation equipment

- Lay the foundation for direct imaging of planets with a large telescope

- Improving the performance of wave-front sensors is the key to evolution

- Search for life outside of Earth

- Aiming for a device that eliminates the effects of turbulence

- Cameras are also an important factor in improving device performance

- Researcher profile

The desire to directly image the actual light emitted by planets using telescopic observation equipment

-Can you tell us about the current status of planetary observation using adaptive optics?

We are currently building an observation device to be installed on the newly built “Seimei”, the largest telescope in Japan, in Okayama Prefecture. This device will be used for direct imaging of exoplanets.

The world's first direct optical detection of an exoplanet occurred in around 2008. Since that time only 10 to 20 others have been confirmed this way, out of around 4000 exoplanet discoveries. In other words, the number of directly imaged planets is still very small.

The reason for the small number is that the performance of observation devices for direct imaging is still lacking. An additional challenge is that exoplanets are very dark objects located near fixed stars that emit their own light, so it is very difficult to detect their light.

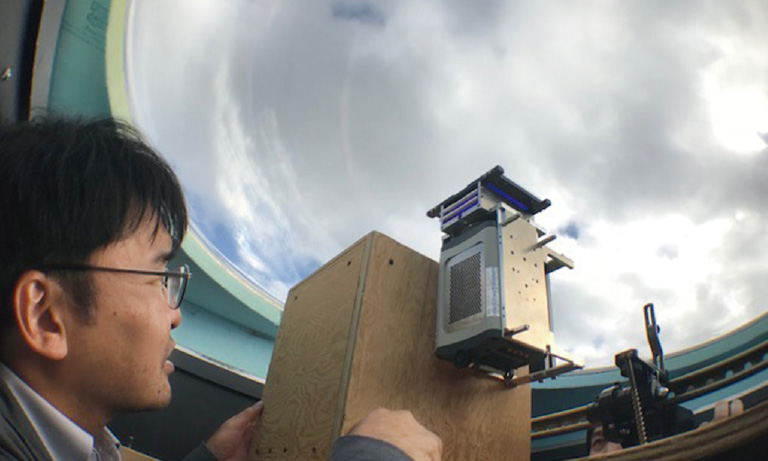

We have developed an exoplanet imager “Second-generation Exoplanet Imager with Coronagraphic AO (SEICA)” for the previously mentioned telescope “Seimei” and are trying to find darker celestial bodies. However, we have not yet achieved the performance needed to detect undiscovered planets.

Some of the world’s largest telescopes, such as the Subaru Telescope and the VLT in Chile, have apertures of 8 m to 10 m, and high detection performance. Since the detection performance of a telescope increases with its size, the performance of “Seimei”, whose aperture is less than half of those larger ones, is inferior. Although the performance of observation equipment is getting better and better, it is still not good enough to detect very dark objects near fixed stars.
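As a rough illustration of why aperture matters, the sketch below compares the diffraction-limited resolution (Rayleigh criterion, 1.22 λ/D) and the light-collecting area of two telescopes. The 3.8 m and 8.2 m apertures and the 1.6 µm observing wavelength are assumptions chosen for illustration, not figures from the interview, and the central obstruction and mirror segmentation are ignored.

```python
import math

ARCSEC_PER_RADIAN = 180.0 * 3600.0 / math.pi


def diffraction_limit_arcsec(aperture_m, wavelength_m=1.6e-6):
    """Rayleigh-criterion angular resolution in arcseconds (assumed wavelength)."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RADIAN


def collecting_area_m2(aperture_m):
    """Area of an unobstructed circular aperture in square metres."""
    return math.pi * (aperture_m / 2.0) ** 2


if __name__ == "__main__":
    # Both aperture values are illustrative assumptions.
    for label, aperture in [("smaller telescope", 3.8), ("larger telescope", 8.2)]:
        print(
            f"{label}: D = {aperture} m, "
            f"resolution = {diffraction_limit_arcsec(aperture):.3f} arcsec, "
            f"area = {collecting_area_m2(aperture):.1f} m^2"
        )
```

Under these assumptions, the larger aperture both separates a planet from its star at a smaller angle and collects several times more light, which is why adaptive optics has to work harder on a smaller telescope to reach comparable contrast.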

Lay the foundation for direct imaging of planets with a large telescope

-The adaptive optics device “SEICA” was developed to find exoplanets by direct imaging. I’m sure that further performance improvements will be required in the future. But what kind of results do you expect to achieve by improving its performance?

There are two goals for “SEICA”. One is to detect exoplanets, and the other is to serve as a test-bed for technologies that can be applied to large telescopes in the future.

As mentioned earlier, direct imaging is very difficult because the target is dark and very close to a fixed star. But this is not the only reason why it is difficult to observe. Another major influence on the quality of an image is the atmosphere. When the atmosphere is disturbed, the image is distorted, which is what we see as the twinkling of stars, and the outline of the image is blurred or shifted, making it difficult to obtain a clear image. Adaptive optics has been developed to counteract the effects of such atmospheric turbulence.

While our main goal is to elucidate the actual state of planets by direct imaging of planets, we also hope that our research will result in improving the performance of the adaptive optics system and laying the foundation for direct imaging of planets in the future with a large optical telescope equipped with adaptive optics.

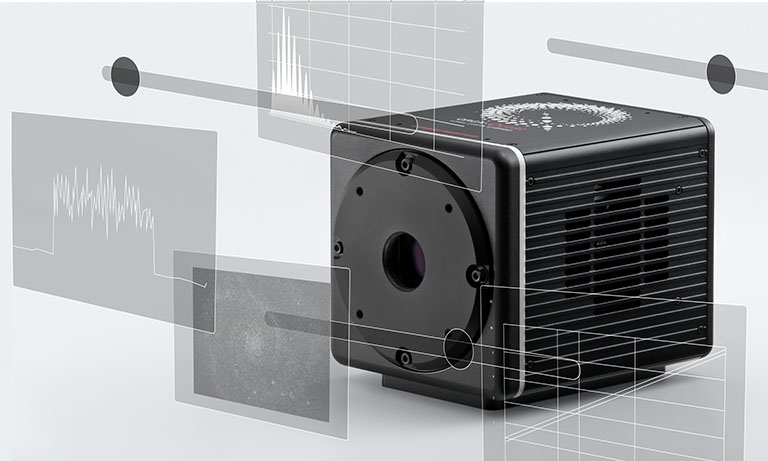

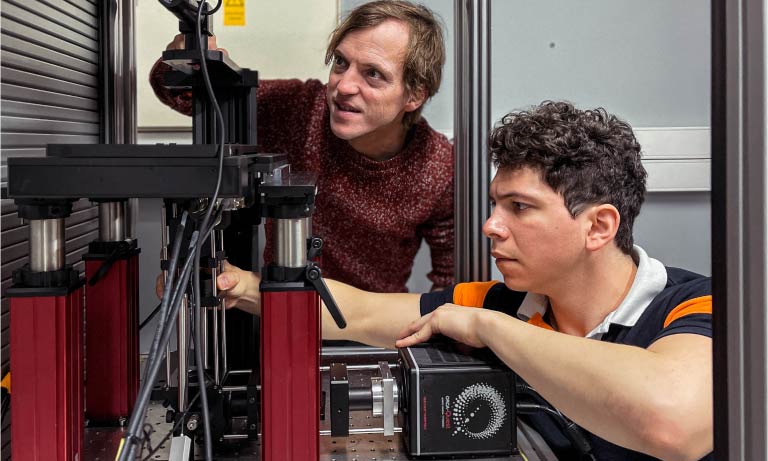

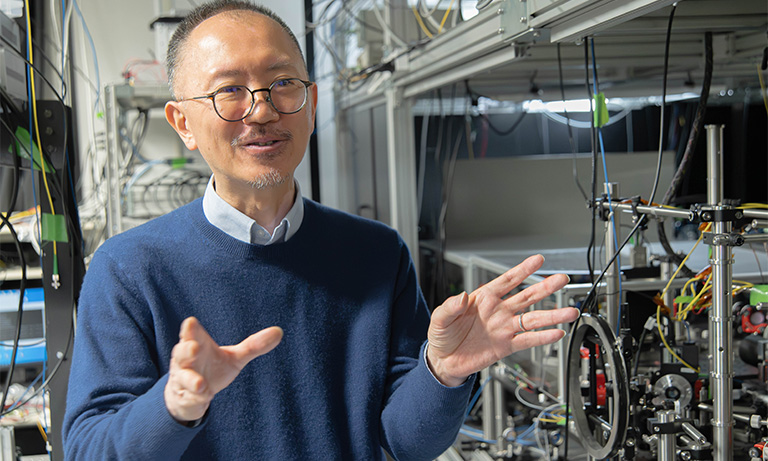

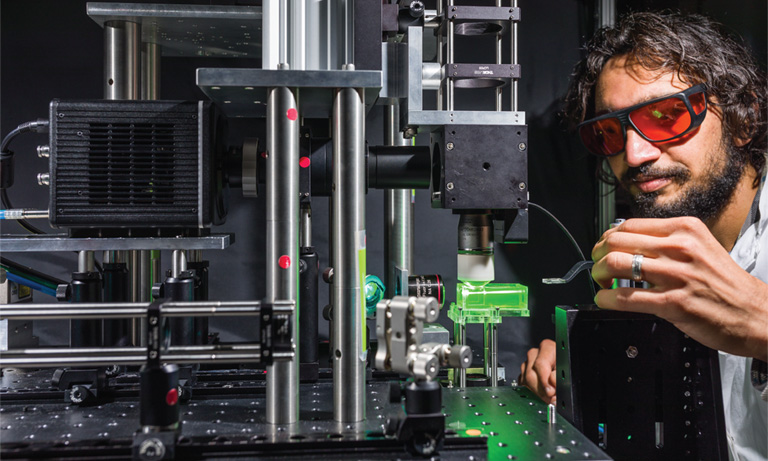

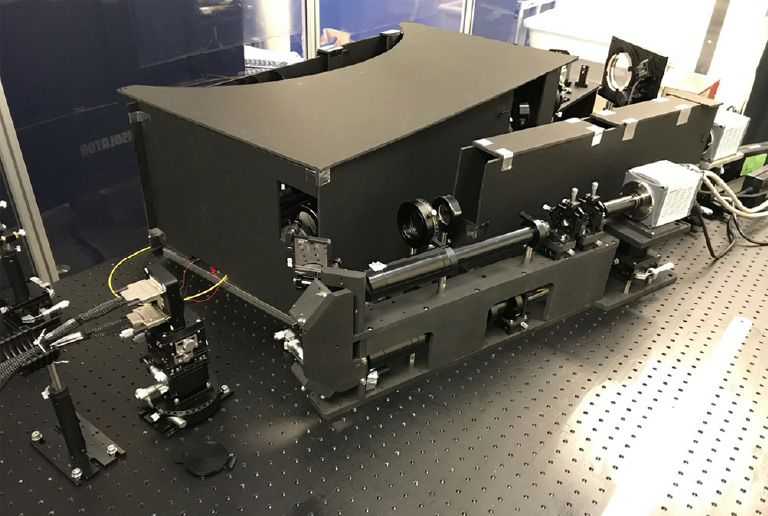

Adaptive optics device “SEICA”

Improving the performance of wave-front sensors is the key to evolution

-I have heard that improving the performance of adaptive optics is essential for achieving the first goal, “Detection of exoplanets”. What is the most necessary thing for that?

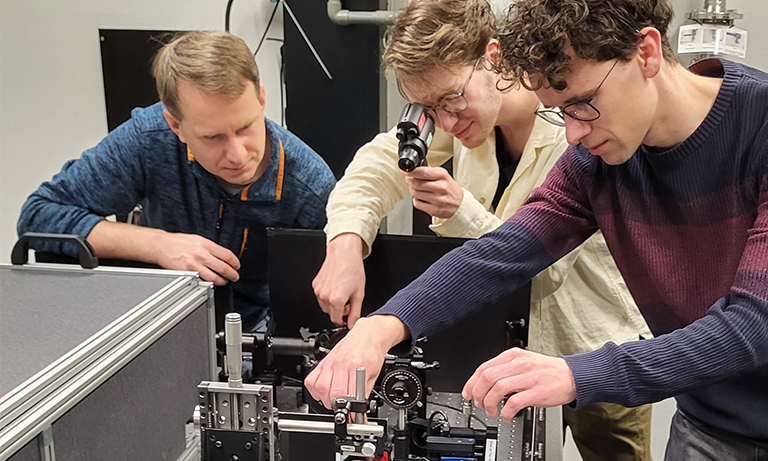

Among adaptive optics devices, there is a high-performance class called “Extreme AO”. Extreme AO is also used at the Subaru Telescope, but the one we have developed uses an FPGA to control the wavefront more quickly and precisely. The reason why we are pursuing such high-precision wavefront control is that, again, how well atmospheric turbulence can be cancelled out is directly related to the accuracy of imaging.

In fact, the shape of the atmosphere itself does not change so much. If you look at it at a frequency of about 1 kHz or 6.5 kHz, it keeps almost the same shape, but when the wind blows, it gets swept away. So the wavefront shape you are watching thinking “this is it” will drift away the next moment and you will be looking at another shape. Therefore, the time delay between measurement and correction appears as an error in the corrected shape. Strictly speaking, our system not only corrects while measuring the wavefront in real time, but also incorporates a control algorithm that predicts the wavefront ahead of the wind and corrects accordingly.
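The effect of this delay can be sketched with a toy one-dimensional model. The code assumes a "frozen" wavefront that keeps its shape and is simply carried along by the wind; the phase screen, wind speed, delay, and aperture width are all hypothetical values for illustration, and the shift-based prediction is a stand-in for, not a description of, the control algorithm used in SEICA.

```python
import math


def wavefront(x):
    """Hypothetical static phase screen (radians): a sum of two sinusoids."""
    return math.sin(2 * math.pi * x / 2.0) + 0.5 * math.sin(2 * math.pi * x / 0.7 + 1.0)


def rms_residual(wind_speed, delay, predicted_speed=0.0, n=400, aperture=4.0):
    """RMS of (true wavefront at correction time) minus (applied correction).

    The screen is measured at t = 0 and the correction is applied `delay`
    seconds later, after the wind has moved the screen by wind_speed * delay.
    With predicted_speed = 0 the measured shape is applied as-is; otherwise
    it is shifted by predicted_speed * delay before being applied.
    """
    total = 0.0
    for i in range(n):
        x = aperture * i / n
        true_now = wavefront(x - wind_speed * delay)
        applied = wavefront(x - predicted_speed * delay)
        total += (true_now - applied) ** 2
    return math.sqrt(total / n)


if __name__ == "__main__":
    wind = 10.0  # m/s, assumed
    for delay in (0.0005, 0.001, 0.002):  # seconds, assumed
        plain = rms_residual(wind, delay)
        predicted = rms_residual(wind, delay, predicted_speed=9.0)  # imperfect wind estimate
        print(f"delay {delay * 1e3:.1f} ms: no prediction {plain:.4f} rad, "
              f"with prediction {predicted:.4f} rad")
```

In this model the residual grows with the delay, and shifting the measured shape by even an imperfect wind estimate removes most of it. Real turbulence has several layers moving at different speeds, so this is only a qualitative picture.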

The conventional “tilt-measurement” wavefront sensors check the shape of a wavefront by looking at the inclination of each point on the wavefront and integrating it back to the original shape, but because of the integral process, measurement errors at each point propagate to the overall shape measurement. However, with the “phase measurement” used in our adaptive optics equipment, you can directly measure “how high is this position on the wavefront.”

As the phase can be measured directly, errors are less likely to occur. Since adaptive optics for direct imaging of exoplanets requires high-speed and high-precision wavefront control, so does wavefront measurement. Improving the performance of wavefront sensors is essential for the evolution of adaptive optics.
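The difference between the two measurement styles can be illustrated with a small one-dimensional simulation. It assumes the same Gaussian noise level for every slope and every phase sample and reconstructs the shape from slopes by a simple running sum; all names and numbers are hypothetical. Real two-dimensional reconstructors typically use least-squares fits, in which the noise grows much more mildly, so the sketch exaggerates the effect to make it visible.

```python
import math
import random


def compare_sensors(n_points=64, noise=0.05, trials=200, seed=0):
    """Return (rms_error_from_slopes, rms_error_from_direct_phase) in radians."""
    rng = random.Random(seed)
    true_phase = [math.sin(2 * math.pi * i / n_points) for i in range(n_points)]
    sq_slope = 0.0
    sq_phase = 0.0
    for _ in range(trials):
        # Tilt-measurement sensor: noisy local slopes, integrated point by point.
        recon = [true_phase[0]]
        for i in range(1, n_points):
            slope = (true_phase[i] - true_phase[i - 1]) + rng.gauss(0.0, noise)
            recon.append(recon[-1] + slope)
        # Phase-measurement sensor: each point is measured directly.
        direct = [p + rng.gauss(0.0, noise) for p in true_phase]
        sq_slope += sum((r - t) ** 2 for r, t in zip(recon, true_phase))
        sq_phase += sum((d - t) ** 2 for d, t in zip(direct, true_phase))
    norm = trials * n_points
    return math.sqrt(sq_slope / norm), math.sqrt(sq_phase / norm)


if __name__ == "__main__":
    from_slopes, from_phase = compare_sensors()
    print(f"RMS error, integrated slopes: {from_slopes:.3f} rad")
    print(f"RMS error, direct phase:      {from_phase:.3f} rad")
```

Because each reconstructed point inherits the noise of every slope before it, the error of the integrated shape accumulates across the aperture, while the direct measurement stays at the single-sample noise level.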

Search for life outside of Earth

-I would like to ask you in more detail about the second goal, “application to large telescopes”. Can you also tell us about the ideas that guide you as you pursue your research?

At the level of studying exoplanets, I’m sure that what researchers think is the ultimate is “searching for life in places other than the earth.” Of course, it is absolutely impossible to optically find life on the surface of a planet, so I think there is a desire to properly investigate things such as the appearance of plants, the presence of oxygen, and the composition of the atmosphere, which would bring us a little closer to discovering life.

In other words, direct imaging means that you can do spectroscopy. If you can do spectroscopy, you can get crucial clues as to what kinds of molecules are present. I believe that the ultimate goal of our generation is to use direct imaging to get clues as to whether life exists or not, and if so, what kind of life it is.

Aiming for a device that eliminates the effects of turbulence

When we say “ultimate” in terms of device development, the ultimate goal is to create an adaptive optics device that completely eliminates the effects of atmospheric turbulence. Compared to the adaptive optics devices that existed about 20 years ago, the ones that are currently being developed have much better performance, and they are getting closer to the level of making a beautiful wavefront, but are still not the ultimate adaptive optics in the true sense. It requires faster measurement, computing, and correction. Without these pursuits, the ultimate goal cannot be achieved.

Cameras are also an important factor in improving device performance

-The fact that a high-speed and high-precision wavefront sensor is essential for faster measurement, computing, and correction means that the 2D sensor that captures images, that is, the camera side, is also required to have the same high level of performance, right?

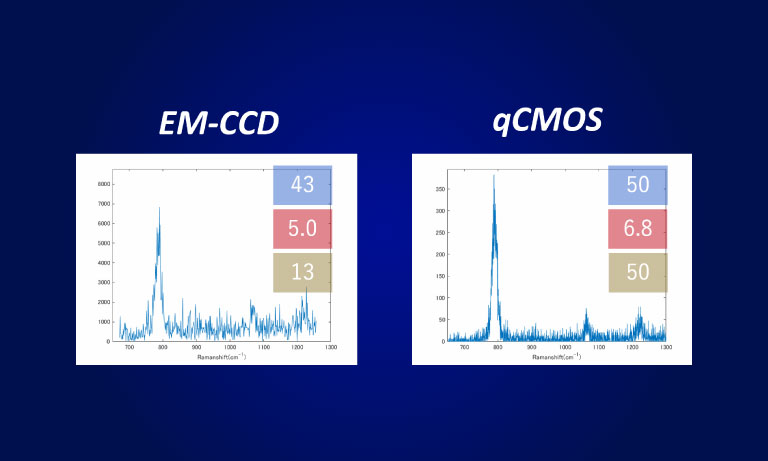

That’s right. After all, what we want most is high speed and low noise. As I said earlier, there is a delay between measuring a wavefront and applying the correction, and that delay causes a temporal error. So the speed at which the camera acquires wavefront images is almost the rate-limiting step. However, the faster the acquisition, the shorter the exposure time, and the fewer photons can be detected per frame. That’s why I said high speed and low noise, but there is a real trade-off between the two: the faster you go, the larger the readout noise and photon noise become relative to the signal, and conversely, if you slow down to reduce them, you will naturally see temporal errors in the image.

In order to resolve the trade-off between the two, predictive control has been introduced into the control algorithm. However, it is difficult to implement complex control because of the cost of the computation, both in terms of hardware and software. If you build a dedicated circuit from measurement to control, you can shorten the calculation time to the limit, but the dedicated circuit is expensive and not easy to modify. A general PC, on the other hand, has the advantage that algorithms can be freely developed and changed. Therefore, we have adopted an FPGA, which is an intermediate device between a PC and a dedicated circuit, as a control device.
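The photon side of this trade-off can be put into numbers with a simple per-frame noise model. The sketch assumes that the signal per frame is the photon arrival rate divided by the frame rate, that photon noise is Poisson (variance equal to the signal), and that the camera adds a fixed readout noise per frame; the photon rate and readout noise values are hypothetical, not specifications of any particular camera.

```python
import math


def frame_snr(photon_rate_per_s, frame_rate_hz, read_noise_e):
    """Signal-to-noise ratio of one wavefront-sensor frame.

    signal = photons collected during one exposure (assumed 1 / frame rate)
    noise  = sqrt(photon-noise variance + readout-noise variance)
    """
    signal = photon_rate_per_s / frame_rate_hz
    return signal / math.sqrt(signal + read_noise_e ** 2)


if __name__ == "__main__":
    photon_rate = 1.0e5  # detected photons per second, assumed
    for frame_rate in (500, 1000, 2000, 5000):
        low = frame_snr(photon_rate, frame_rate, read_noise_e=1.0)
        high = frame_snr(photon_rate, frame_rate, read_noise_e=3.0)
        print(f"{frame_rate:5d} Hz: SNR {low:5.2f} (1 e- read noise), "
              f"{high:5.2f} (3 e- read noise)")
```

Under this model, raising the frame rate shortens the delay but lowers the signal-to-noise ratio of every frame, and the readout noise matters more as fewer photons arrive per frame, which is why a fast, low-noise camera eases both sides of the trade-off.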

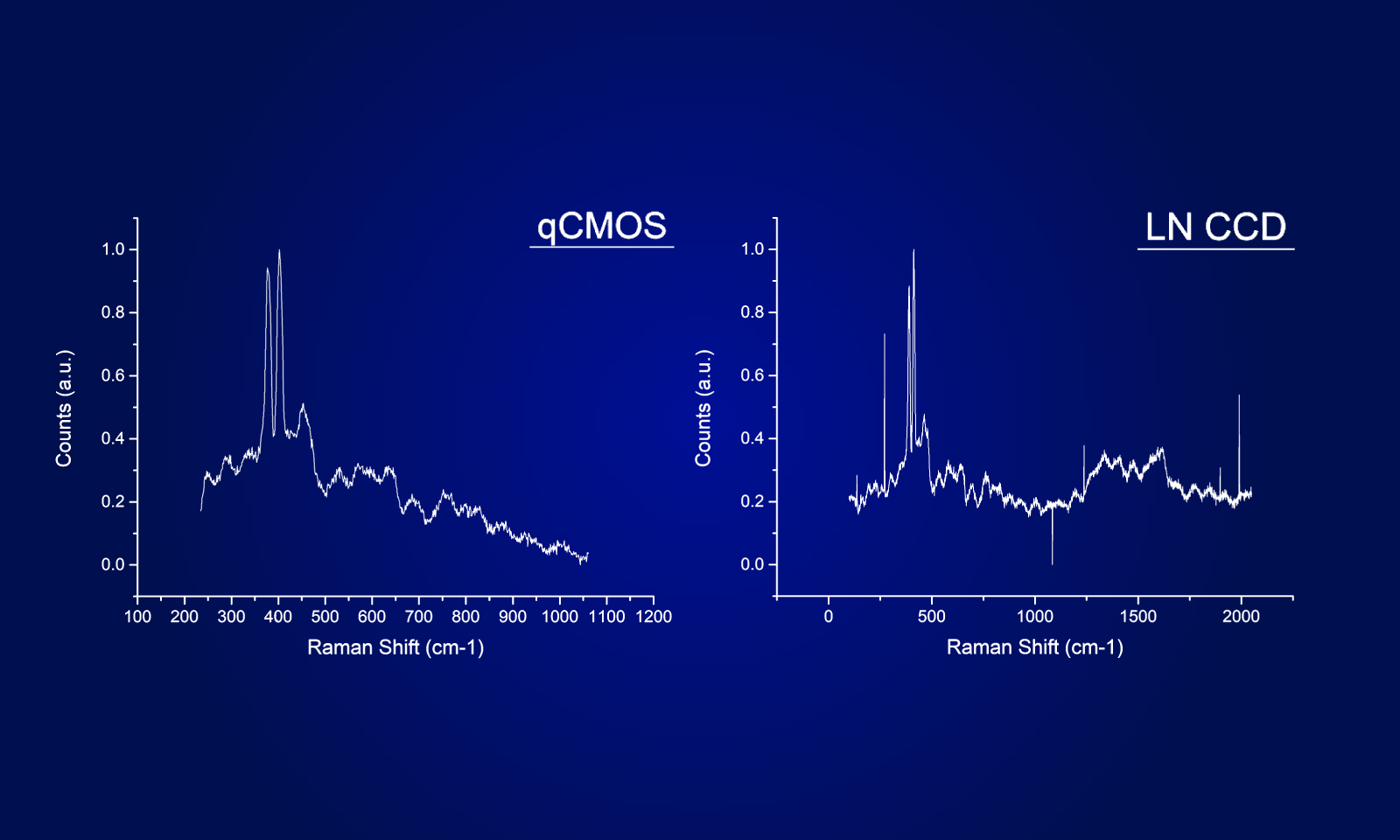

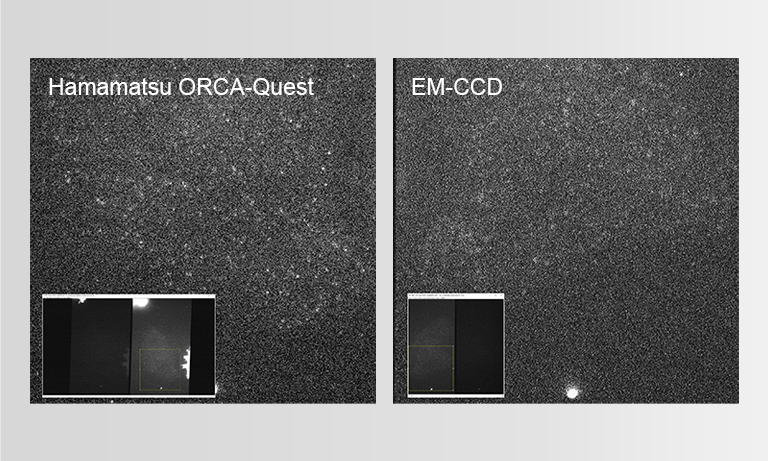

Even if the algorithm eliminates the trade-off, it is still very important to select a camera to be used for the wavefront sensor. We try to carefully select and adopt the one that has both high speed and low noise.

Researcher profile

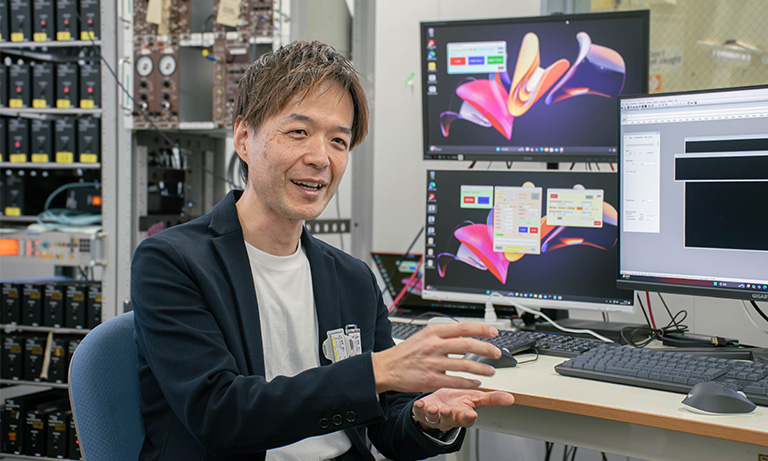

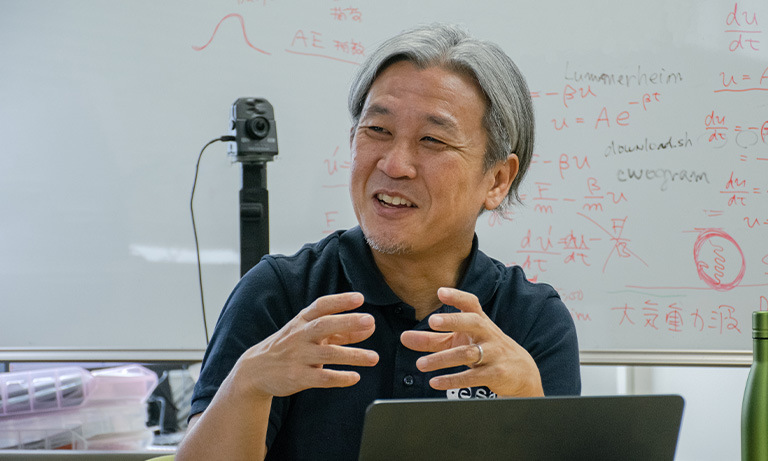

Kodai Yamamoto, Ph.D.

Researcher at the Astronomical Observatory of the Graduate School of Science, Kyoto University (inter-university collaboration).

Completed doctoral program at the Graduate School of Science, Osaka University in 2014.

Yamamoto specializes in infrared astronomy, and engages in direct imaging observations of extrasolar planets, and development of observation equipment.

*The interview was conducted in 2020.

Related product

Digital CMOS camera with an sCMOS sensor designed for scientific research use. It has improved resolution and sensitivity (especially in the NIR region) compared with the ORCA-Flash4.0 (82 % peak QE).
